David Piuva's Software Renderer

It's harder to use in the beginning because you have to learn CPU optimization to make your own real-time filters, but much easier to maintain in the long run by not having heavy dependencies scattered everywhere in the system. It has most of the basic features in 2D, 3D and isometric rendering and some additional GUI and window management to make it easier to use. If a new operating system comes out, you just need to write a new window backend that uploads the canvas image and handles basic mouse and keyboard interaction.

So why don't we just use the same technique on a GPU to make it even faster?

Using the same technique on the GPU will actually slow rendering down, because the fixed function hardware cannot be turned off.

Ordering memory for linear cache reads does nothing on a GPU because its cache is multi-dimensional.

GPUs dislike sampling transparent pixels, so pre-rendering actually hurts GPU performance by doing more work in total.

Reading depth per pixel from an image disables the GPU's quad-tree optimization.

In other words, the GPU cannot beat the CPU for isometric rendering when it comes to speed, and speed is all that GPUs are good at.

Disadvantages of software rendering

* CPUs cannot beat GPUs for what they are actually made for, rendering complex 3D graphics quickly in high resolutions. But if you're mostly writing retro 2D games, this point matters little, because you are limited by the screen's refresh rate either way.

* GPUs are easier to optimize shaders for, because the graphics card will just throw more calculation power at the problem at a lower clock frequency. Learning to optimize image filters on a CPU will however be a useful skill if you plan to work with safety-critical computer vision.

* GPUs can work while the CPU does other things. This point only applies if both rendering and game logic are heavy. Most games that are heavy on game logic are just poorly optimized from relying on scripts in performance bottlenecks, thrashing heap memory and abusing generic physics engines.

Benefits of software rendering

* No graphics drivers required. I once wrote a text editor that required the latest version of Direct3D just to start, only to realize that the best looking effects were pixel-exact 2D operations. I felt really stupid, but then began thinking about writing a software renderer for the majority of software where GPU graphics is total overkill and a huge burden for long-term maintenance.

* Future proof without any missing libraries. The first software rendered applications I wrote for Windows 3.1 still work both in newer versions of Windows and through compatibility layers in Linux. My first 3D accelerated games developed for Windows 2000 stopped working when Windows XP came out just a few years later, due to a bug in a third-party dependency that I could not access. This modern software renderer uses all that experience to give you both reliable programs and good looking graphics.

* Pre-rasterized isometric rendering techniques are actually 2D operations under the hood, which puts the CPU on par with the GPU even if it uses the same optimization. When both are capable of displaying more triangles than pixels with a higher frequency than the monitor can display, the only remaining advantage for the GPU is multi-tasking between CPU and GPU.

* CPU rendering can be more deterministic by linking statically to all rendering algorithms. If only using integer types, it can be 100% bit exact between different computers. Higher determinism also unlocks optimizations that would be too risky on old OpenGL versions, such as dirty rectangles and passive rendering.

* CPUs have a higher frequency, which means that they are actually faster than a GPU for low-resolution 2D rendering where the amount of work per draw call is not significant enough to benefit from the GPU. You can reduce the number of draw calls on a GPU using hardware instancing, but it's much easier to just use CPU rendering with a lower call overhead and keep your code well structured.

* No graphics context required, just independent resources. This allows separating your program into completely independent modules without strange side-effects, which improves testability and quality.

* No messy feature flag workarounds in games. Every computer has access to all graphical features, because it is trivial to abstract away which SIMD instructions are called to perform the same math operation in the hardware abstraction layer.

* No device lost exceptions. The CPU will not randomly tell you that it had amnesia and lost all your data.

* No need to mirror changes between CPU and GPU memory with complex synchronization methods to hide the delays of memory transfer. The cache system handles all that for you.

* Can modify the whole graphics pipeline without having to build your own graphics card. This allows learning more about how computer graphics works under the hood.
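The point about integer types being bit exact can be illustrated with a small alpha blend. This is only a minimal sketch and not code from the library: the names `blendChannel` and `blendColor` and the packed 0xAARRGGBB layout are assumptions made for the example. Because it uses nothing but integer multiplication, addition and shifts, every conforming compiler and CPU has to produce the same bits.

```cpp
#include <cstdint>

// Hypothetical helper, not part of any library API.
// Blends one 8-bit channel using integer math only.
// alpha = 0 returns bottom, alpha = 255 returns top.
inline uint8_t blendChannel(uint8_t top, uint8_t bottom, uint8_t alpha) {
    // Weighted sum in the range 0..65025, so 32 bits cannot overflow.
    uint32_t sum = uint32_t(top) * alpha + uint32_t(bottom) * (255u - alpha);
    // Rounded division by 255 using only an addition and two shifts.
    uint32_t biased = sum + 128u;
    return uint8_t((biased + (biased >> 8)) >> 8);
}

// Hypothetical helper: blends a packed 0xAARRGGBB color on top of an
// opaque background, using the alpha of the top color as the weight.
inline uint32_t blendColor(uint32_t top, uint32_t bottom) {
    uint8_t alpha = uint8_t(top >> 24);
    uint32_t red   = blendChannel(uint8_t(top >> 16), uint8_t(bottom >> 16), alpha);
    uint32_t green = blendChannel(uint8_t(top >> 8),  uint8_t(bottom >> 8),  alpha);
    uint32_t blue  = blendChannel(uint8_t(top),       uint8_t(bottom),       alpha);
    return 0xFF000000u | (red << 16) | (green << 8) | blue;
}
```

A floating-point version of the same blend could round differently depending on compiler flags and instruction sets, which is what makes regression tests based on exact image comparison fragile.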

Why use this software renderer instead of other software renderers

Unlike most modern software renderers, this one is neither a by-product of someone's curiosity nor a GPU emulator. This renderer was created because both the graphics APIs and the media layers available on Linux were too unstable, non-deterministic and complex to actually be used. A lot of developers abandoned using graphics APIs directly when Direct3D 12 and Vulkan came out, due to the complexity, and OpenGL is a broken mess where literally no feature works the same on every graphics driver. I needed something that was well defined without feature flags, random crashes and heavy dependencies. It was important that the end user didn't have to install anything on the operating system while still having a graphical user experience.

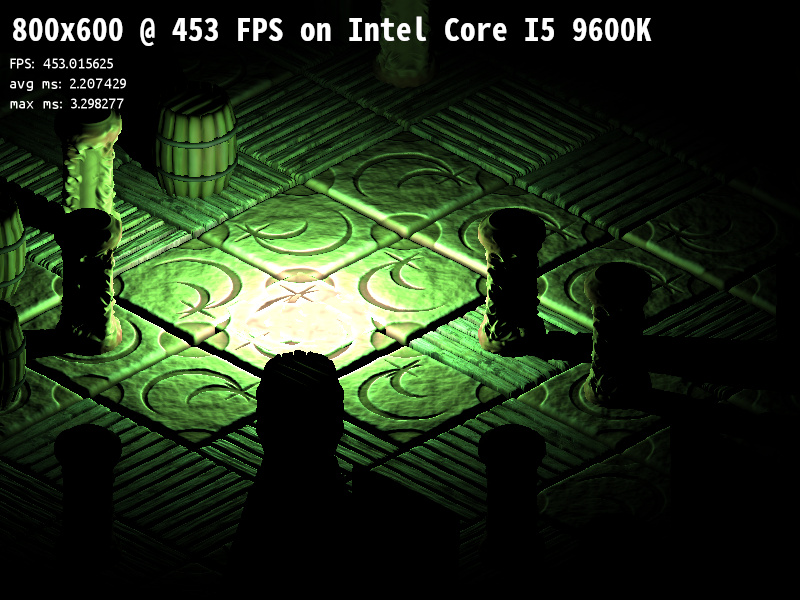

Doing what the CPU is good at at 450 FPS instead of trying to be a GPU at 30 FPS

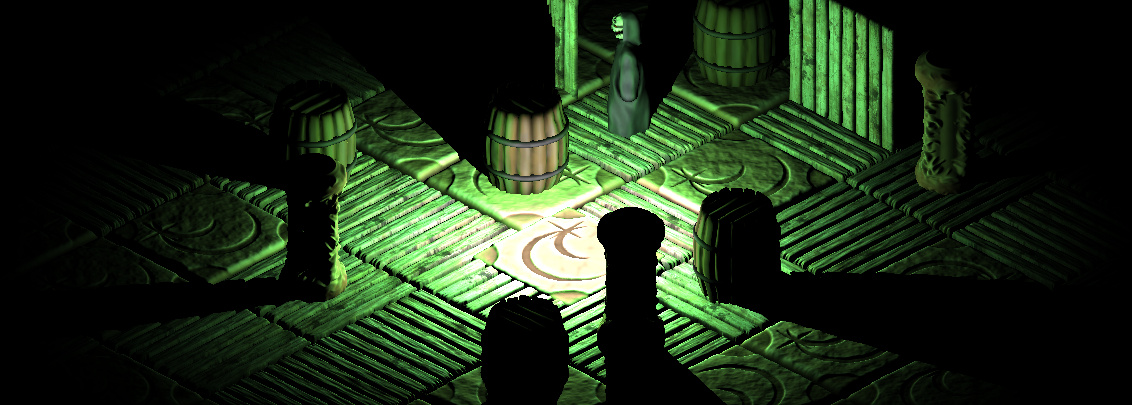

Most software renderers only try to replicate what the GPU is good at and therefore get around 30 frames per second without any interesting light effects. This library has that too, in case you need perspective, but it also has depth buffered 2D draw calls and an example of how to use them for an isometric rendering technique using deferred light.

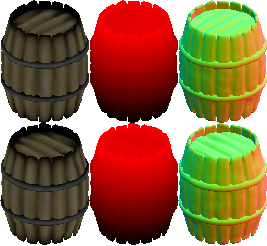

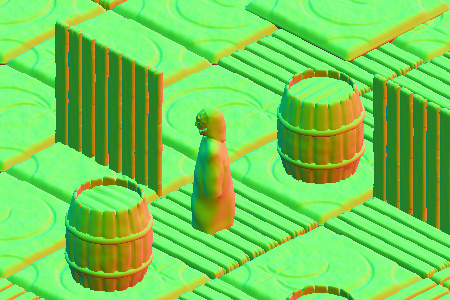

Isometric rendering on the CPU can get hundreds of frames per second with unlimited detail level and heavy effects by avoiding the things that CPUs are bad at. By pre-rasterizing models with fixed camera angles into diffuse, height and normal images, deep sprites can be drawn very quickly by reading memory in a linear cache pattern.
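To make the idea of a deep sprite more concrete, here is a minimal sketch of a depth-tested 2D draw call. It is not the library's API: the types `DeepSprite` and `DeepTarget`, the function `drawDeepSprite`, the 0xAARRGGBB layout and the convention that a larger height is closer to the camera are all assumptions made for the example. Normal images and deferred light are left out to keep it short.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical pre-rasterized sprite with one height value per pixel.
struct DeepSprite {
    int width = 0, height = 0;
    std::vector<uint32_t> diffuse; // 0xAARRGGBB, alpha 0 marks an empty pixel
    std::vector<int32_t> heights;  // height of the surface in each pixel
};

// Hypothetical render target with a color buffer and a height buffer.
struct DeepTarget {
    int width, height;
    std::vector<uint32_t> diffuse;
    std::vector<int32_t> heights;
    DeepTarget(int w, int h)
    : width(w), height(h),
      diffuse(size_t(w) * size_t(h), 0u),
      heights(size_t(w) * size_t(h), INT32_MIN) {}
};

// Draws the sprite with its upper left corner at (left, top).
// A pixel is written only where the sprite is higher than what is already there.
void drawDeepSprite(DeepTarget &target, const DeepSprite &sprite,
                    int left, int top, int32_t baseHeight) {
    assert(sprite.diffuse.size() == size_t(sprite.width) * size_t(sprite.height));
    assert(sprite.heights.size() == sprite.diffuse.size());
    // Clip once per draw call, so the inner loop needs no bound checks.
    const int startX = std::max(0, -left);
    const int startY = std::max(0, -top);
    const int endX = std::min(sprite.width, target.width - left);
    const int endY = std::min(sprite.height, target.height - top);
    if (startX >= endX || startY >= endY) return;
    for (int y = startY; y < endY; y++) {
        // Row pointers let the inner loop walk memory from left to right.
        const uint32_t *srcColor = sprite.diffuse.data() + size_t(y) * sprite.width;
        const int32_t *srcHeight = sprite.heights.data() + size_t(y) * sprite.width;
        uint32_t *dstColor = target.diffuse.data() + size_t(top + y) * target.width + left;
        int32_t *dstHeight = target.heights.data() + size_t(top + y) * target.width + left;
        for (int x = startX; x < endX; x++) {
            const int32_t h = srcHeight[x] + baseHeight;
            if ((srcColor[x] >> 24) != 0 && h > dstHeight[x]) {
                dstColor[x] = srcColor[x];
                dstHeight[x] = h;
            }
        }
    }
}
```

Since each pixel carries its own height, sprites can be drawn in any order and still intersect correctly, and the cost per sprite depends on its size in pixels rather than on how many triangles the original model had.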

Minimal dependency

Unlike some other software renderers, you do not need to install any GPU drivers for this software renderer to work. Static linking to the library makes sure that you don't need to install any third party dependencies, so no installer is needed, just copy the files and run. Only the most essential features (mouse, keyboard, title, windowed, full-screen) are integrated natively on each platform, because it should be easily ported to future operating systems.

Supported operating systems

Linux is fully supported. Tested on many different Linux distributions, both Debian and Arch derivatives, from Intel based desktops to embedded ARM devices. Most Linux distributions come with the GNU compiler toolchain pre-installed so that you just give access permissions and run your compilation script on a new computer.

Microsoft Windows is fully supported. Tested on Windows 11 but only calls functionality that existed on Windows XP (2001 AD).

macOS is supported with the same functionality as on Windows and Linux, but lacks features that are specific to macOS, such as the fullscreen button and menus outside of the window. Tested on Sequoia and Tahoe.

Target hardware

Optimized for Intel/AMD CPUs using SSE2, SSSE3, AVX and AVX2 intrinsics.

Optimized for ARM CPUs using NEON intrinsics.

Also works without SIMD extensions by having a reference implementation using repeated scalar operations.
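The general pattern of hiding SIMD instruction sets behind one function with a scalar reference path could look something like the sketch below. It is not taken from the library's hardware abstraction layer: the function `addSaturated` and the `SKETCH_USE_*` macros are made up for the example, while the intrinsics themselves (`_mm_adds_epu8` from SSE2 and `vqaddq_u8` from NEON) are real.

```cpp
#include <cstddef>
#include <cstdint>
#if defined(__SSE2__) || defined(_M_X64)
    #include <emmintrin.h>
    #define SKETCH_USE_SSE2
#elif defined(__ARM_NEON)
    #include <arm_neon.h>
    #define SKETCH_USE_NEON
#endif

// Hypothetical helper: adds two rows of 8-bit values, clamping each sum at 255.
// The caller never needs to know which instruction set was used.
void addSaturated(uint8_t *target, const uint8_t *a, const uint8_t *b, size_t count) {
    size_t i = 0;
#if defined(SKETCH_USE_SSE2)
    // 16 values at a time using unaligned SSE2 loads and stores.
    for (; i + 16 <= count; i += 16) {
        __m128i va = _mm_loadu_si128(reinterpret_cast<const __m128i*>(a + i));
        __m128i vb = _mm_loadu_si128(reinterpret_cast<const __m128i*>(b + i));
        _mm_storeu_si128(reinterpret_cast<__m128i*>(target + i), _mm_adds_epu8(va, vb));
    }
#elif defined(SKETCH_USE_NEON)
    // 16 values at a time using NEON.
    for (; i + 16 <= count; i += 16) {
        vst1q_u8(target + i, vqaddq_u8(vld1q_u8(a + i), vld1q_u8(b + i)));
    }
#endif
    // Scalar reference path, which also handles the remaining tail elements.
    for (; i < count; i++) {
        unsigned sum = unsigned(a[i]) + unsigned(b[i]);
        target[i] = uint8_t(sum > 255u ? 255u : sum);
    }
}
```

Because the scalar loop defines the expected result, it doubles as a reference to test each SIMD path against, and a platform without any supported extension still gets the same output.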

Sneak previews

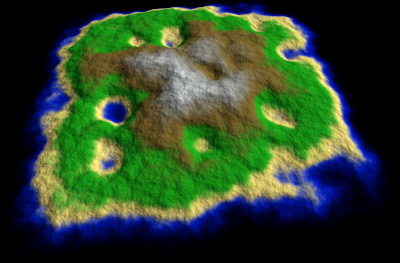

Just to see how far full perspective rendering can be pushed with CPU rendering, I created a test project with screen-aligned detail tessellation.

The dynamic ground texture used for shading follows the view frustum with multiple resolutions and allows blend mapping multiple materials before generating screen-aligned bi-linear polygons.

More triangles than the screen can display in 1080p at around 80-100 frames per second.

The ground uses depth-sorted anti-aliasing instead of brute-force sampling, which reduces the need for anisotropic filtering.

When having this many triangles in software rendering, you also don't need perspective correction per pixel.

Not sure if a reusable engine can be created out of this, because it had to lock the camera's Y axis for aligning polygons with the screen.

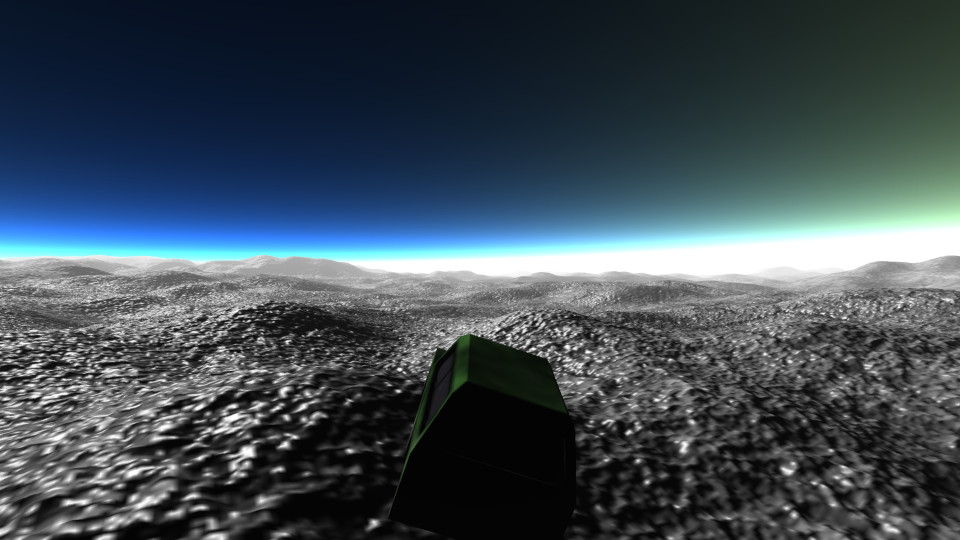

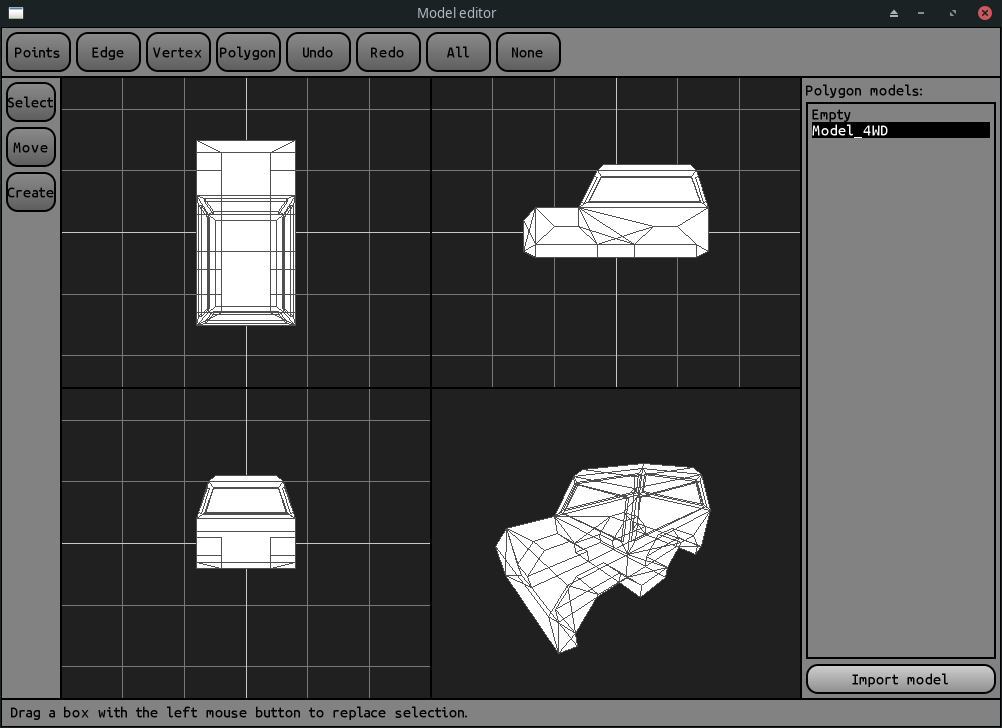

The model editor is still far from ready for a first release, because large changes are needed to improve the usability before anyone can start to learn it.

It is also good to wait with releasing the first model format, because more features in the first version means fewer messy workarounds for when features are not supported by an older editor.

Project links

The library is supposed to be built from source code using relative paths, just like any other part of your own project. This makes sure that any changes you make to the library will not require any additional steps for building. There are no executable binaries to download for DFPSR, which improves both security and compatibility with future operating systems. It is all compiled from source code that you can inspect yourself before building on your own computer.

Source code: https://github.com/Dawoodoz/DFPSR